ARMADA: Augmented Reality for Robot Manipulation and Robot-Free Data Acquisition

Abstract

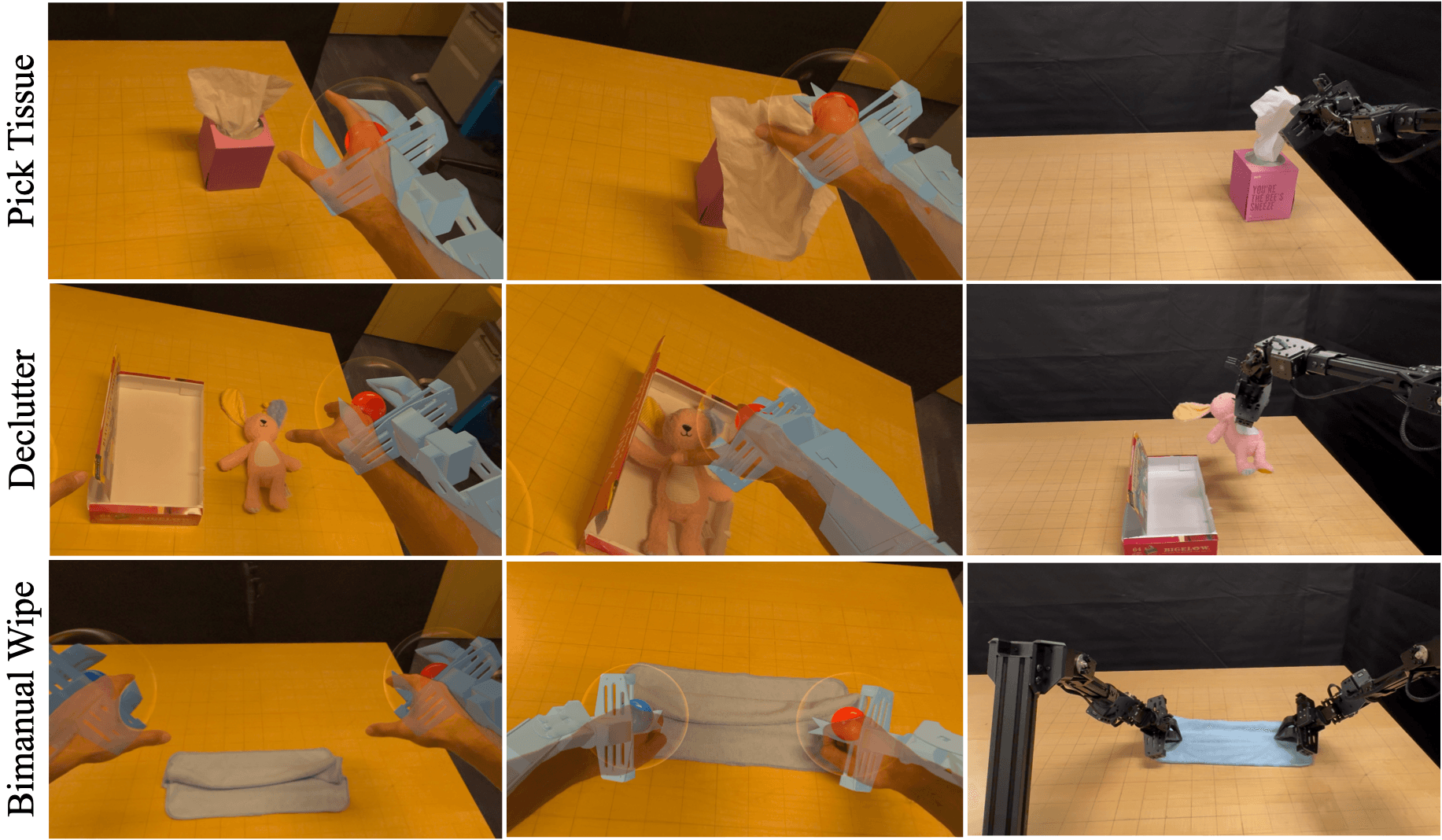

Teleoperation for robot imitation learning is bottlenecked by hardware availability. Can high-quality robot data be collected without a physical robot? We present a system that augments Apple Vision Pro with real-time virtual robot feedback. By giving users an intuitive understanding of how their actions translate to robot motions, we enable the collection of natural barehanded human data that is compatible with the limitations of physical robot hardware. We conducted a user study in which 15 participants each demonstrated 3 different tasks under 3 different feedback conditions, and we directly replayed the collected trajectories on physical robot hardware. Results suggest that live robot feedback dramatically improves the quality of the collected data, pointing to a new avenue for scalable human data collection without access to robot hardware.

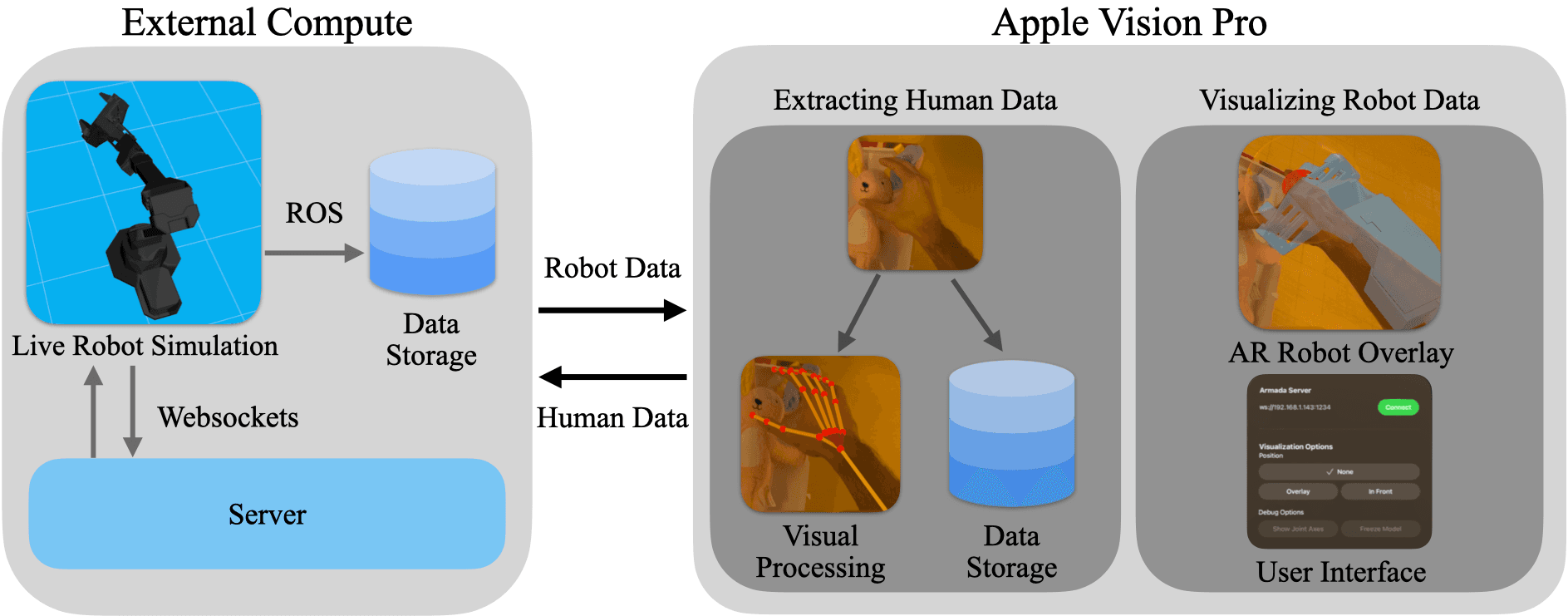

System Architecture

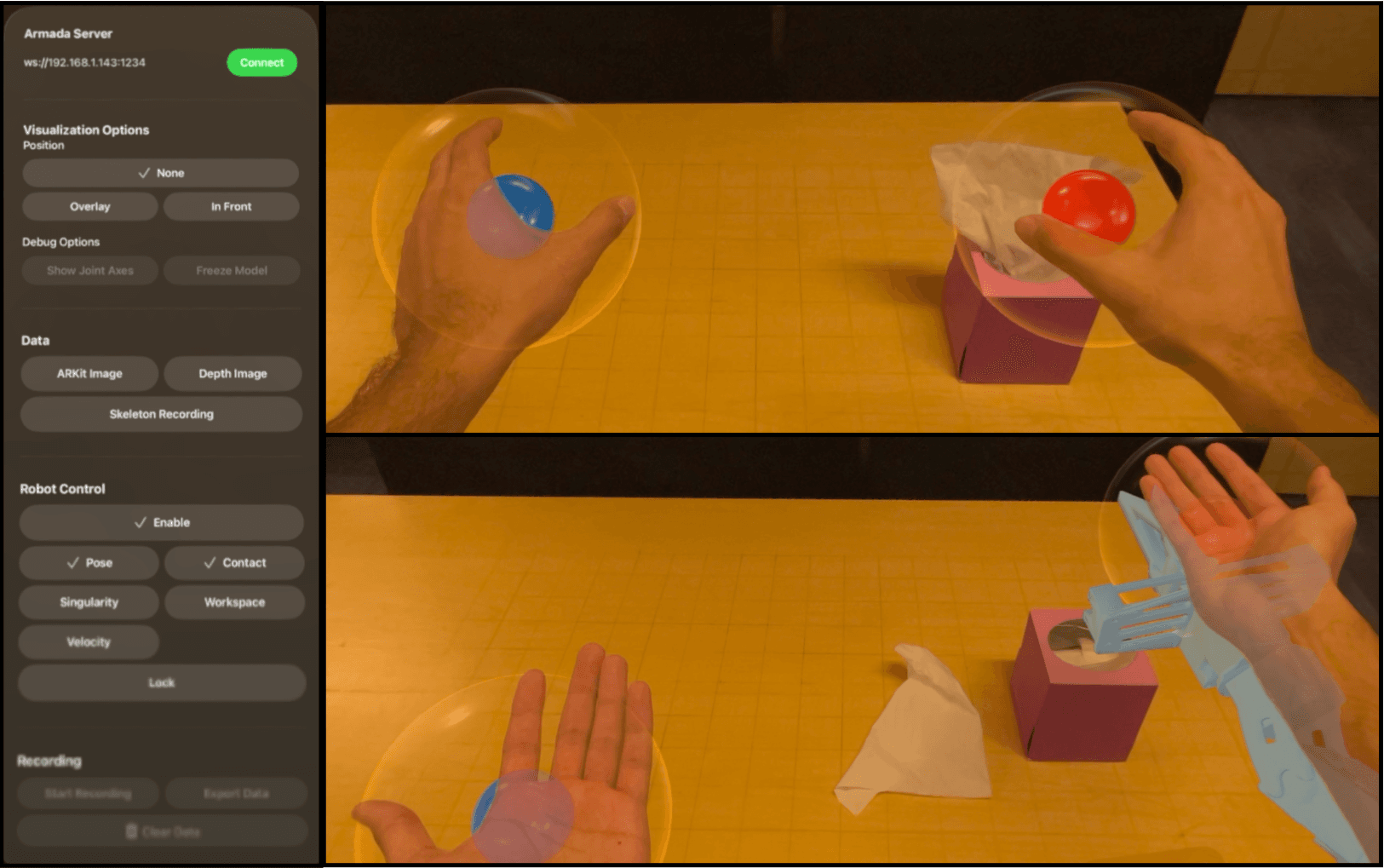

User Interface

User Study

Outlook

By enabling in-the-wild data collection from anyone with a Vision Pro, ARMADA may facilitate the creation of large datasets with tens of thousands of hours of manipulation data. Such datasets may enable imitation learning at unprecedented scale, an essential ingredient for generalization across tasks, environments, and robot hardware.

BibTeX Citation

Contact

If you have any questions, please feel free to contact Nataliya Nechyporenko or Ryan Hoque.